Today on R&D World

Stravito’s new Assistant is a conversational insights companion advancing generative AI

Q-CTRL hires defense industry leader to expand business partnerships in US and UK

Chip manufacturing explodes with AI growth, in this week’s R&D Power Index

Additive manufactured cutting tool for precision machining is the R&D 100 winner of the day

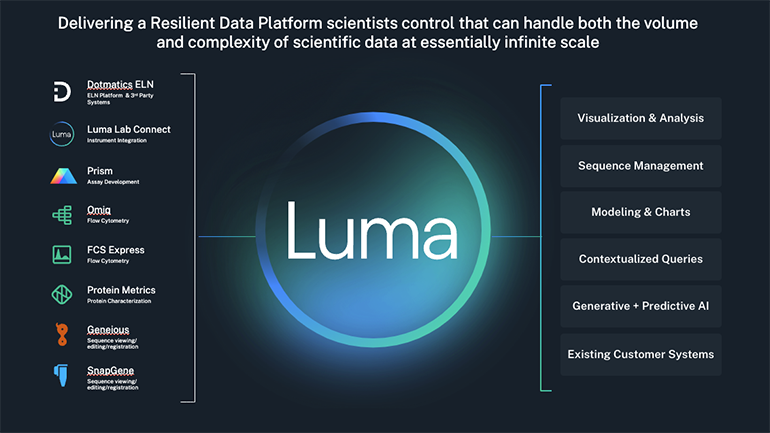

Luma Lab Connect unlocks value of lab data to accelerate scientific R&D decision-making

SuperNeuro: An Accelerated Neuromorphic Computing Simulator is R&D 100 winner of the day

Sanofi reprioritizing its R&D structure, in this week’s R&D Power Index

For drug discovery, comprehensive genomic analysis is essential

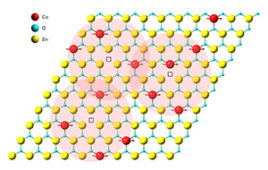

Olena Palasyuk, Ames National Laboratory R&D Technician of the Year

New Applied Biosystems TaqMan QSY2 probes enable multiplexing of research

Cerberus: Cybersecurity for EV Charging Infrastructure — R&D 100 winner of the day

The great lab of the future debate: Modular or singular approach?

Korean chip firm to build plant near Purdue, in this week’s R&D Power Index

R&D 100 moving gala awards ceremony to famed cemetery

Podcasts

Physics

A 32 Tesla superconducting magnet is focus of Episode 10 of R&D 100 – The Podcast

In this, the tenth episode of the R&D 100 Podcast, we examine the latest technology in superconducting magnets. These magnets have existed since the 1960s, but the achievable field strength has been limited by the properties of superconducting materials. So, VP, Editorial Director Paul J. Heney and Senior Editor Aimee Kalnoskas of R&D World spoke with…

Sponsored Content

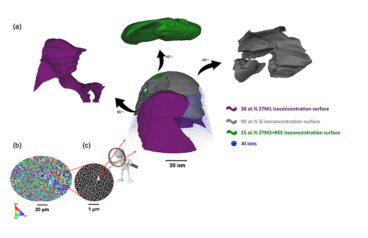

Ultra-Strong Aluminum Alloys for Additive Manufacturing

Despite attractive properties, such as low density, high strength, and corrosion resistance, aluminum alloys have experienced a slower adoption in additive manufacturing (AM) compared to steels, titanium alloys, and superalloys. To date, only a limited number of aluminum powders are available commercially for AM that are suitable for demanding, high-stress or high-temperature environments. Dr. Amir…

Life Science

QDx Pathology Services adopts Proscia’s software to improve speed and precision

QDx Pathology Services, an independent anatomical, molecular, and clinical pathology laboratory serving medical professionals and facilities throughout the U.S., is working with Proscia, a provider of digital and computational pathology solutions, to innovate its practice. The laboratory has deployed Proscia’s software to enable its pathologists to work faster and more confidently. Digital pathology is modernizing…

Pramana joins Proscia to help laboratories realize more value from their pathology data

Overcoming the machine learning paradox: How the Allen Institute is scaling its computational research

ABB Robotics joins XtalPi to build intelligent automated laboratories

SpaceX returns vital life science research sponsored by the ISS National Lab

Nanotechnology

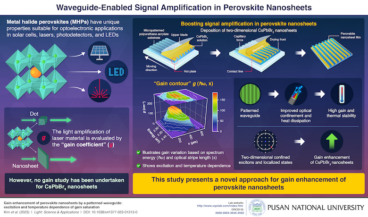

Pusan National University researchers boost signal amplification in perovskite nanosheets

Perovskite nanosheets show distinctive characteristics with significant applications in science and technology. In a recent study, researchers from Korea and the U.K. achieved enhanced signal amplification in CsPbBr3 perovskite nanosheets with a unique waveguide pattern, which enhanced both gain and thermal stability. These advancements carry wide-ranging implications for laser, sensor, and solar cell applications and…

Energy

ABB and CERN identify energy-saving opportunity in heating and ventilation motors

ABB and CERN, the European Laboratory for Particle Physics, have identified significant energy-saving potential through a strategic research partnership focused on the cooling and ventilation system at one of the world’s leading particle physics laboratories in Geneva, Switzerland. The study included energy efficiency audits that helped to identify a savings potential of 17.4%…

New NPL neutron facility boosts UK’s nuclear energy, defense, and fusion research capabilities

Thermo Fisher Scientific sets 2030 renewable goal with solar developer ib vogt

U.S. DOE announces intent to issue $4.8M in secure communications development funding

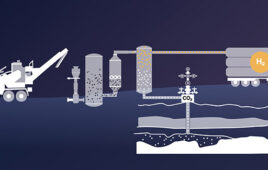

Mote kicks off second biomass to hydrogen project in Northern California

Chemistry

PFAS — the collateral damage begins

I predicted collateral damage as regulators addressed PFAS. I was right. On December 1, the EPA effectively killed Inhance Technologies’ barrier packaging business. The press on the EPA action is pretty bifurcated. Most are taking a victory lap, cheering that a producer of PFAS will cease production. A minority ask what will replace the technology…

Material Science

Materials informatics platforms enhance the innovation process in outsourced R&D Labs

In the fast-paced arena of research and development (R&D), where the pursuit of innovation is a driving force, the landscape is undergoing a transformative period. With expanding industries and heightened global competition among manufacturers, the crux of competitive advantage now resides in the ability of organizations to innovate with efficiency and timeliness. As organizations grapple…

Sustainable Ion Exchange Resin for Ultrapure Water Treatment, R&D 100 winner of the day

FixCarbon Technology: Carbon-negative bioplastics from afforestation, R&D 100 winner of the day

MaterialsZone partners with Kafrit Group, enhancing products and customer experience

Semiconductors

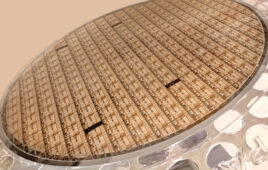

ASU and Deca lead North America’s first advanced fan-out wafer-level packaging R&D center

Arizona State University (ASU) and Deca Technologies (Deca), a provider of advanced wafer- and panel-level packaging technology, announced a groundbreaking collaboration to create North America’s first fan-out wafer-level packaging (FOWLP) research and development center. The new Center for Advanced Wafer-Level Packaging Applications and Development is set to catalyze innovation in the United States, expanding domestic…

Aerospace

Two groundbreaking experiments planned for the upcoming solar eclipse

Southwest Research Institute (SwRI) is leading two groundbreaking experiments, on the ground and in the air, to collect astronomical data from the total solar eclipse that will shadow a large swath of the United States on April 8, 2024. SwRI’s Dr. Amir Caspi leads the Citizen Continental-America Telescopic Eclipse (CATE) 2024 experiment,…

SwRI’s Dr. Alan Stern conducts space research aboard Virgin Galactic’s VSS Unity

Northern Germany’s expanding R&D ecosystem focuses on space